Prompt Engineering

Do you really think you know Prompt Engineering?

Today we will discuss, in detail, the fundamentals of how prompts are used to get the most out of your LLM (Large Language Model).

What are prompts?

Prompts are the instructions and context passed to a language model to accomplish a task.

So, is that it? Is that what we call “Prompt Engineering”?

The answer is NO, my friend. In interaction design, a similar idea describes creating a set of prompts or questions that guide a user toward a desired outcome, helping designers and developers build interfaces that are easy and intuitive to use. With language models, however, the term means something more specific:

“Prompt Engineering” is the practice of guiding the language model with a clear, detailed, well-defined, and optimized prompt in order to achieve a desired output.

At its most basic, a prompt interaction has two elements: the instruction supplied by the user and the response the language model generates from it. In other words, when a user provides an instruction, the language model produces a response.

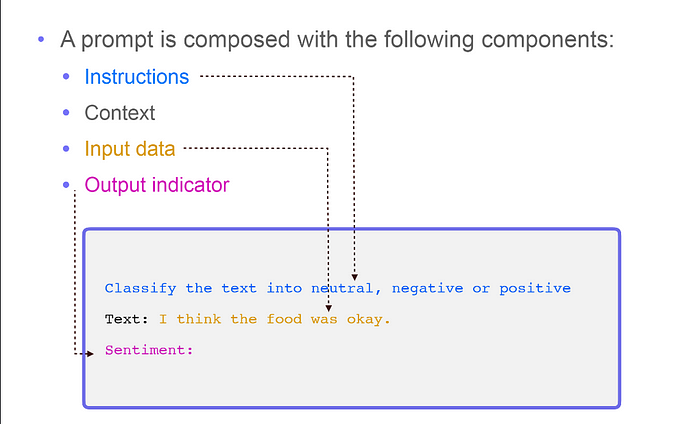

Let’s see how a prompt is composed:

- Instructions: This is the section where the task description is expressed. The task to be done must be clearly stated.

- Context: A task can be understood differently depending on its context. For this reason, providing the command without its context can cause the language model to output something other than what is expected.

- Input data: The data the instruction will be executed on, and what kind of data it is. Presenting it to the language model clearly and in a structured format improves the quality of the response.

- Output indicator: Describes the expected output. The output can be defined structurally (for example, “Answer in one word” or “Return a bulleted list”) so that the model produces a response in a specific format.
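To make the four components concrete, here is a minimal Python sketch that assembles them into one prompt string. The function name `build_prompt` and the section labels (“Instruction:”, “Context:”, and so on) are illustrative assumptions rather than a required format; any clear labeling would do.

```python
def build_prompt(instruction: str, context: str = "", input_data: str = "",
                 output_indicator: str = "") -> str:
    """Join the supplied prompt components into a single prompt string."""
    # Same order as the list above; the labels are illustrative only.
    parts = [
        ("Instruction", instruction),
        ("Context", context),
        ("Input", input_data),
        ("Output format", output_indicator),
    ]
    # Leave out any component that was not supplied.
    return "\n\n".join(f"{label}: {text}" for label, text in parts if text)


prompt = build_prompt(
    instruction="Classify the sentiment of the review.",
    context="You are helping an online store sort customer reviews.",
    input_data="The blender broke after two days.",
    output_indicator="Answer with one word: positive or negative.",
)
print(prompt)
```

Only the instruction is mandatory here; the other components are optional, which mirrors how a short prompt can still work while a fuller one gives the model more to go on.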

You might not know it, but there are different types of prompts:

- Instruction Prompting

- Role Prompting

- Standard Prompting

Instruction Prompting

Instruction Prompting involves giving the model clear, step-by-step instructions for completing a task or responding to a query. This type of prompting makes it clear to the model what needs to be done and how to do it.
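A minimal sketch of the step-by-step style: the hypothetical helper `instruction_prompt` numbers each instruction and wraps the input text in triple-quote delimiters so the instructions and the data stay visibly separate. The numbering and the delimiter are assumptions for illustration; the example prompt that follows in this article does not use them.

```python
def instruction_prompt(steps: list[str], text: str) -> str:
    """Number each instruction, then append the text the instructions apply to."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    delimiter = '"""'  # marks where the input text starts and ends
    return f"{numbered}\n\nText:\n{delimiter}\n{text}\n{delimiter}"


steps = [
    "Read the following sales email.",
    "Remove any personally identifiable information (PII).",
    'Replace each removed item with a placeholder such as "[NAME]".',
]
prompt = instruction_prompt(steps, "Hi John, ...")
print(prompt)
```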

Prompt:

Read the following sales email. Remove any personally identifiable information (PII), and replace it with the appropriate placeholder. For example, replace the name "John Doe" with "[NAME]".

Hi John,

I'm writing to you because I noticed you recently purchased a new car. I'm a salesperson at a local dealership (Cheap Dealz), and I wanted to let you know that we have a great deal on a new car. If you're interested, please let me know.

Thanks,

Jimmy Smith

Phone: 410-805-2345

Email:

Response:

Hi [NAME],

I'm writing to you because I noticed you recently purchased a new car. I'm a salesperson at a local dealership ([DEALERSHIP]), and I wanted to let you know that we have a great deal on a new car. If you're interested, please let me know.

Thanks,

[SALESPERSON]

Phone: [PHONE NUMBER]

Email: [EMAIL ADDRESS]

Role Prompting

Role Prompting involves assigning the model a specific role, along with role-specific guidelines and instructions. The assigned role shapes how the model responds, which can make its output better suited to the task at hand.

Role prompting is most often used to style text: you ask the AI to pretend to be a certain person or to act in a certain way, which changes how it writes. This can be used to change the tone, style, and even the depth of the information presented.
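Many chat-model APIs accept a list of messages tagged with roles such as `system` and `user`, and a persona is often placed in the system message. The sketch below only builds such a list for the food-critic prompt that follows; the helper name `role_messages` is hypothetical, and the exact message format and how to send it depend on the provider you use.

```python
def role_messages(role: str, task: str) -> list[dict[str, str]]:
    """Build a chat-style message list that assigns the model a role."""
    return [
        # The system message sets the persona the model should adopt.
        {"role": "system", "content": f"You are {role}."},
        # The user message carries the actual request.
        {"role": "user", "content": task},
    ]


messages = role_messages(
    "a food critic writing for the Michelin Guide",
    "Write a review of [random pizza place].",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the role separate from the task makes it easy to reuse the same persona for many requests.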

Prompt: You are a food critic writing for the Michelin Guide. Write a review of [random pizza place].

Response: "Located in the heart of [random city], [random pizza place] is a popular spot for pizza lovers. Upon entering, the warm, inviting atmosphere and the smell of freshly-baked pizza will make you feel right at home. The menu offers a wide selection of classic pizzas, as well as some unique and creative options. The crust is perfectly crispy and the toppings are always fresh and flavorful. The service is friendly and attentive, and the prices are reasonable. Whether you’re looking for a quick bite or a leisurely dinner, [random pizza place] is sure to satisfy your pizza cravings."

Standard Prompting

Standard Prompting involves creating and implementing a set of standard prompts that can be used consistently across various interactions and scenarios. This ensures uniformity and efficiency in responses, making it easier to manage and analyze user interactions.
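One way to keep prompts uniform is to store a single template and fill it in for every request. This sketch uses Python's standard `string.Template`; the summarization template and the helper `standard_prompt` are hypothetical examples, not a prescribed format.

```python
from string import Template

# One shared template, reused for every request so the wording never drifts.
SUMMARY_TEMPLATE = Template(
    "Summarize the following $doc_type in $n_sentences sentences.\n\n$text"
)


def standard_prompt(doc_type: str, n_sentences: int, text: str) -> str:
    # substitute() raises KeyError if a placeholder has no value,
    # so an incomplete prompt fails loudly instead of reaching the model.
    return SUMMARY_TEMPLATE.substitute(
        doc_type=doc_type, n_sentences=n_sentences, text=text
    )


ticket = standard_prompt("support ticket", 2, "My order arrived damaged and ...")
review = standard_prompt("product review", 2, "Great blender, very quiet ...")
print(ticket)
print(review)
```

Because every request goes through the same template, differences between responses come from the inputs rather than from changes in wording.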

Settings to keep in mind

- When prompting a language model, you should keep a few settings in mind

- You can get very different results from the same prompt with different settings

- One important setting controls how deterministic the model is when generating completions for prompts

- Temperature and top_p are two important parameters to keep in mind: temperature scales how random the choice of each next token is, and top_p limits sampling to the smallest set of most likely tokens whose combined probability reaches p

- Generally, keep these low if you are looking for exact answers

- Keep them high if you are looking for more diverse responses

- In short, the higher the temperature, the more varied and “creative” your output will be
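To see why these two settings change the output, here is a small self-contained simulation of how temperature and top_p are commonly applied to a model's next-token scores. The scores are made up and this is not any particular provider's implementation; it only illustrates the idea on a toy three-token distribution.

```python
import math
import random


def sampling_distribution(logits: dict[str, float], temperature: float = 1.0,
                          top_p: float = 1.0) -> dict[str, float]:
    """Next-token probabilities after temperature scaling and top-p filtering."""
    if temperature <= 0:
        raise ValueError("this sketch requires temperature > 0")
    # Temperature divides the scores: below 1 sharpens the distribution,
    # above 1 flattens it.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}  # stable softmax
    total = sum(exps.values())
    ranked = sorted(((tok, e / total) for tok, e in exps.items()),
                    key=lambda pair: pair[1], reverse=True)
    # Top-p (nucleus): keep the most likely tokens until their cumulative
    # probability reaches top_p, then renormalize over what was kept.
    kept, cumulative = [], 0.0
    for tok, p in ranked:
        kept.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break
    norm = sum(p for _, p in kept)
    return {tok: p / norm for tok, p in kept}


def sample(logits: dict[str, float], temperature: float = 1.0,
           top_p: float = 1.0) -> str:
    """Draw one token from the adjusted distribution."""
    dist = sampling_distribution(logits, temperature, top_p)
    return random.choices(list(dist), weights=list(dist.values()))[0]


logits = {"blue": 2.0, "clear": 1.0, "grey": 0.5}  # made-up scores for "The sky is ..."
print(sampling_distribution(logits, temperature=0.2))
print(sampling_distribution(logits, temperature=1.5))
print(sampling_distribution(logits, top_p=0.5))
print(sample(logits, temperature=1.5))
```

With `temperature=0.2` almost all of the probability lands on “blue”, while `temperature=1.5` spreads it across all three tokens; with `top_p=0.5` only “blue” survives, because it alone already covers more than half of the probability mass.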

Let’s dive deeper and look at some more advanced techniques.

What Is “Showing Examples”?

Few-shot prompting, also known as showing examples or “shots,” is a strategy where you provide the AI with examples of what you want it to do. The model picks up the pattern from these examples within the prompt itself, without any retraining.

How It Works

- Provide Examples: Show the model a few examples of the task. For instance, if you’re classifying customer feedback as positive or negative, give three examples of each.

- New Input: Give the model a new piece of feedback to classify, like “It doesn’t work!”

- Model Learns: The model looks at the examples and classifies the new feedback based on the patterns it noticed.

Importance of Structure

The structure of your examples matters. If your examples follow a clear input: classification format, the model will tend to produce a similarly simple output, such as a single word (“negative”), instead of a full sentence (“this review is negative”).
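A minimal sketch of building a few-shot classification prompt in the input: classification format, following the three-positive, three-negative setup described under “How It Works”. The feedback examples and the helper name `few_shot_prompt` are made up for illustration; the prompt ends right after a colon to nudge the model toward answering with a single label.

```python
def few_shot_prompt(examples: list[tuple[str, str]], new_input: str) -> str:
    """One '"input": label' line per example, then the unlabeled new input."""
    lines = [f'"{text}": {label}' for text, label in examples]
    # End on a bare colon so the model is nudged to answer with one label.
    lines.append(f'"{new_input}":')
    return "\n".join(lines)


examples = [  # made-up customer feedback, three of each class
    ("I love it, works perfectly.", "positive"),
    ("Setup took two minutes. Great product.", "positive"),
    ("Best purchase I've made this year.", "positive"),
    ("It broke after one day.", "negative"),
    ("Total waste of money.", "negative"),
    ("Support never answered my emails.", "negative"),
]
prompt = few_shot_prompt(examples, "It doesn't work!")
print(prompt)
```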

Using Few-Shot Prompting to Control Output Format

Few-shot prompting is particularly useful when you need the output to follow a specific format that’s hard to describe directly to the model. Providing structured examples can help the model understand the required format and produce the correct output.

Example Use Case

Imagine you’re conducting an economic analysis and need a list of names and occupations from local newspaper articles. You want the model to output this information in the “First Last [OCCUPATION]” format. To achieve this, you can use few-shot prompting by providing the model with examples.

Steps to Create Structured Prompts

- Identify the Task: You need the model to extract names and occupations from text.

- Create Examples: Show the model a few examples in the desired format:

  - Example 1: “John Smith [Carpenter]”

  - Example 2: “Jane Doe [Teacher]”

  - Example 3: “Alice Johnson [Doctor]”

- Provide a New Input: Give the model a new article to analyze.

- Generate Output: The model uses the examples to produce the output in the correct format.
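The steps above can be sketched in Python: one hypothetical function builds a few-shot prompt from the three example lines, and another parses a model reply that follows the “First Last [OCCUPATION]” format. The sample reply is invented, and the regular expression is a simplification that only accepts two single-word names.

```python
import re

FORMAT_EXAMPLES = ["John Smith [Carpenter]", "Jane Doe [Teacher]", "Alice Johnson [Doctor]"]


def extraction_prompt(article: str) -> str:
    """Few-shot prompt that demonstrates the 'First Last [OCCUPATION]' format."""
    shots = "\n".join(FORMAT_EXAMPLES)
    return (
        "List every person mentioned in the article and their occupation, "
        "one per line, in this format:\n"
        f"{shots}\n\nArticle:\n{article}\n\nPeople:"
    )


# Simplified: exactly two single-word names, then an occupation in brackets.
LINE_PATTERN = re.compile(r"^(\w+) (\w+) \[([^\]]+)\]$")


def parse_people(model_output: str) -> list[tuple[str, ...]]:
    """Return (first, last, occupation) tuples; off-format lines are skipped."""
    people = []
    for line in model_output.splitlines():
        match = LINE_PATTERN.match(line.strip())
        if match:
            people.append(match.groups())
    return people


reply = "Maria Lopez [Baker]\nSure, here is the list:\nTom Reed [Mayor]"  # invented reply
print(parse_people(reply))
```

Because the examples pin down a strict format, the reply can be parsed mechanically, and any chatty lines the model adds are simply ignored.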

Variants of Shot Prompting

The term “shot” refers to “example” in the context of prompting AI models. Aside from few-shot prompting, there are two other types of shot prompting: zero-shot and one-shot. The difference between these variants is the number of examples you provide to the model.

Zero-Shot Prompting

Zero-shot prompting is the simplest form. It involves giving the model a prompt without any examples and asking it to generate a response. This relies entirely on the model’s pre-existing knowledge and understanding.

Example of a zero-shot prompt:

- Prompt: “Translate ‘Hello’ to French.”

- Response: “Bonjour.”

One-Shot Prompting

One-shot prompting involves providing the model with a single example to guide its response. This helps the model understand the context and format better than zero-shot prompting.

Example of a one-shot prompt:

- Example: “Add 2+2: 4”

- Prompt: “Add 3+5:”

- Response: “8”

Few-Shot Prompting

Few-shot prompting involves giving the model two or more examples. This gives the model a clearer picture of the task, which often improves the accuracy and relevance of its responses.

Example of a few-shot prompt:

- Example 1: “Add 2+2: 4”

- Example 2: “Add 3+5: 8”

- Prompt: “Add 4+6:”

- Response: “10”

By varying the number of examples, you can guide the model more effectively and help it understand and respond to prompts accurately.
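The three variants differ only in how many worked examples precede the query, which this small sketch makes explicit. It reuses the addition examples from this section; the helper `k_shot_prompt` is hypothetical.

```python
SHOTS = [("Add 2+2:", "4"), ("Add 3+5:", "8")]


def k_shot_prompt(query: str, k: int) -> str:
    """Prepend the first k worked examples to the query (k=0 is zero-shot)."""
    lines = [f"{question} {answer}" for question, answer in SHOTS[:k]]
    lines.append(query)
    return "\n".join(lines)


for k, name in [(0, "zero-shot"), (1, "one-shot"), (2, "few-shot")]:
    print(f"--- {name} ---")
    print(k_shot_prompt("Add 4+6:", k))
```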

Combining Techniques in Prompting

In our exploration of prompts, we’ve encountered various formats and levels of complexity. These prompts can incorporate context, instructions, and multiple input-output examples. However, exploring them separately only scratches the surface of their potential. By combining different prompting techniques, we can create more robust and effective prompts.

Example: Context, Instruction, and Few-Shot Prompting

Let’s examine a prompt that integrates context, instruction, and few-shot prompting to illustrate its power:

Context: Imagine we are tasked with classifying tweets on Twitter. The context is set by explaining the task of categorizing tweets into positive or negative sentiments based on their content.

Instruction: The prompt includes specific instructions such as “Make sure to classify the last tweet correctly.” This directs the AI on what action to take with the provided information.

Few-Shot Prompting: The prompt also includes two examples: one of a positive tweet and another of a negative tweet. These examples serve as instances of the task that the model can learn from.

Combined Prompt:

- Context: “You are analyzing tweets on Twitter to classify their sentiment (positive or negative).”

- Instruction: “Make sure to classify the last tweet correctly.”

- Few-Shot Examples:

  - Example 1 (positive): “I love this product! It’s so useful and innovative.”

  - Example 2 (negative): “This service is terrible. I would not recommend it to anyone.”

Task: The AI is expected to use the provided context, follow the instruction, and apply the lessons learned from the few-shot examples to accurately classify the sentiment of the final tweet.
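Putting the pieces together, here is a sketch that assembles the combined prompt from the context, the instruction, and the two example tweets. The “Tweet:/Sentiment:” layout, the labels attached to the examples, and the final tweet are assumptions made for illustration.

```python
CONTEXT = "You are analyzing tweets on Twitter to classify their sentiment (positive or negative)."
INSTRUCTION = "Make sure to classify the last tweet correctly."
TWEET_EXAMPLES = [  # the two example tweets, with labels attached
    ("I love this product! It’s so useful and innovative.", "Positive"),
    ("This service is terrible. I would not recommend it to anyone.", "Negative"),
]


def combined_prompt(tweet: str) -> str:
    """Context, then instruction, then labeled examples, then the unlabeled tweet."""
    shots = "\n".join(f'Tweet: "{text}"\nSentiment: {label}' for text, label in TWEET_EXAMPLES)
    # The final tweet has no label; the model is expected to supply it.
    return f'{CONTEXT}\n{INSTRUCTION}\n\n{shots}\nTweet: "{tweet}"\nSentiment:'


print(combined_prompt("The delivery was faster than I expected!"))  # hypothetical tweet
```

Each technique occupies its own part of the prompt, so any one of them can be changed or removed without rewriting the others.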

Benefits of Combining Techniques

- Enhanced Understanding: Context provides background, instructions give specific tasks, and few-shot examples offer concrete instances for the model to learn from, improving its comprehension.

- Improved Accuracy: By integrating these elements, prompts become more precise and tailored to the desired outcome, leading to more accurate responses from the AI.

- Versatility: Combining techniques allows for flexibility in designing prompts that can address a wide range of tasks and requirements effectively.

By harnessing the synergy of context, instruction, and few-shot prompting, we can create prompts that leverage the full potential of AI models, enhancing their capability to perform complex tasks with accuracy and intelligence.

Conclusion

In summary, prompts serve as powerful tools in shaping AI behavior and performance. By understanding how to craft and deploy prompts effectively — whether to instruct, guide, or train — we unlock the full potential of AI to tackle real-world challenges with intelligence and precision. As we continue to explore and refine these techniques, the horizon of possibilities for AI applications expands, promising a future where intelligent systems contribute significantly to our daily lives and beyond.

Feedback is welcome! Make sure you follow and subscribe to the newsletter so you never miss a NEW CUTTING-EDGE TECH UPDATE.